Site-to-Site with IPsec

Remote access and site-to-site with IPsec and OpenVPN

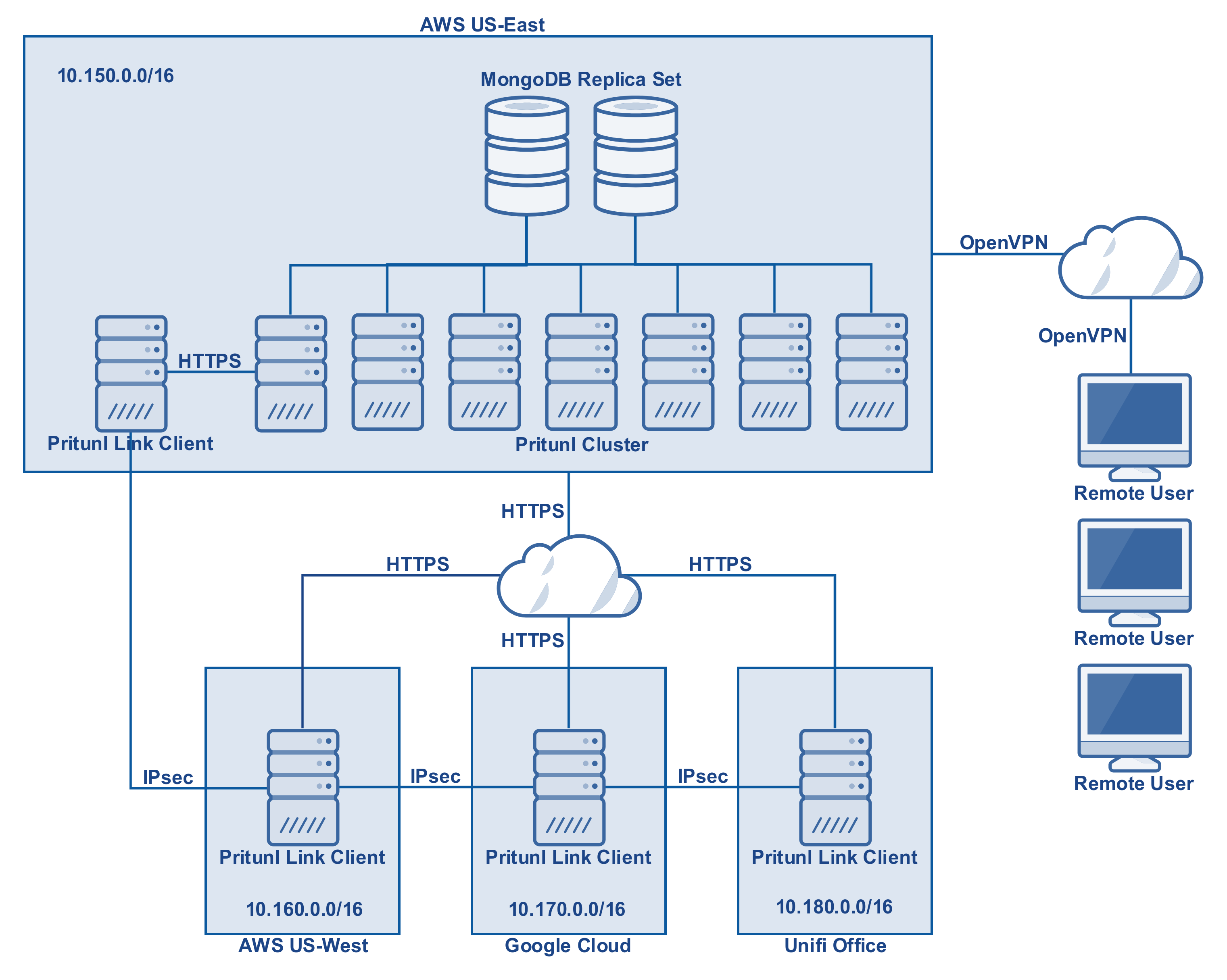

This tutorial describes configuring site-to-site links with IPsec and remote access with OpenVPN. The diagram below shows the network topology for this tutorial.

MongoDB Server

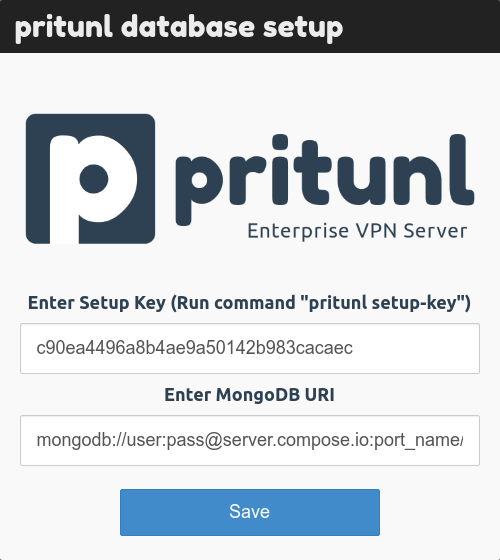

A MongoDB cluster will need to be created for the Pritunl servers to connect to. Services such as MongoDB Atlas or Compose can be used to deploy a managed MongoDB cluster in the same AWS region.

Initial Setup

After a MongoDB cluster has been deployed, all the Pritunl servers must be configured to connect to that same cluster. If a Pritunl server already has a MongoDB URI configured, it can be changed by running the command pritunl reconfigure and then restarting the Pritunl service.

Source/Dest Check

When creating servers on AWS, ensure that the source/dest check is disabled. On Google Cloud, IP forwarding must be enabled.
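For AWS, the source/dest check can also be disabled through the EC2 API instead of the console. The following is a minimal sketch, assuming boto3 is installed and AWS credentials are configured. The helper name disable_source_dest_check and the instance ID in the usage comment are hypothetical placeholders, and the region is taken from the example later in this tutorial.

```python
def disable_source_dest_check(ec2_client, instance_ids):
    """Disable the source/dest check on each of the given EC2 instances.

    ec2_client is expected to be a boto3 EC2 client (an assumption of this
    sketch); instance_ids is a list of instance ID strings.
    """
    for instance_id in instance_ids:
        # One attribute is changed per call, so each instance gets its own call.
        ec2_client.modify_instance_attribute(
            InstanceId=instance_id,
            SourceDestCheck={"Value": False},
        )


# Example usage (not executed here; the instance ID is a placeholder):
#
#   import boto3
#   ec2 = boto3.client("ec2", region_name="us-west-2")
#   disable_source_dest_check(ec2, ["i-0123456789abcdef0"])
```

The client is passed in as an argument so the same helper can be pointed at any region or exercised with a stub before touching real instances.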

Configure Links

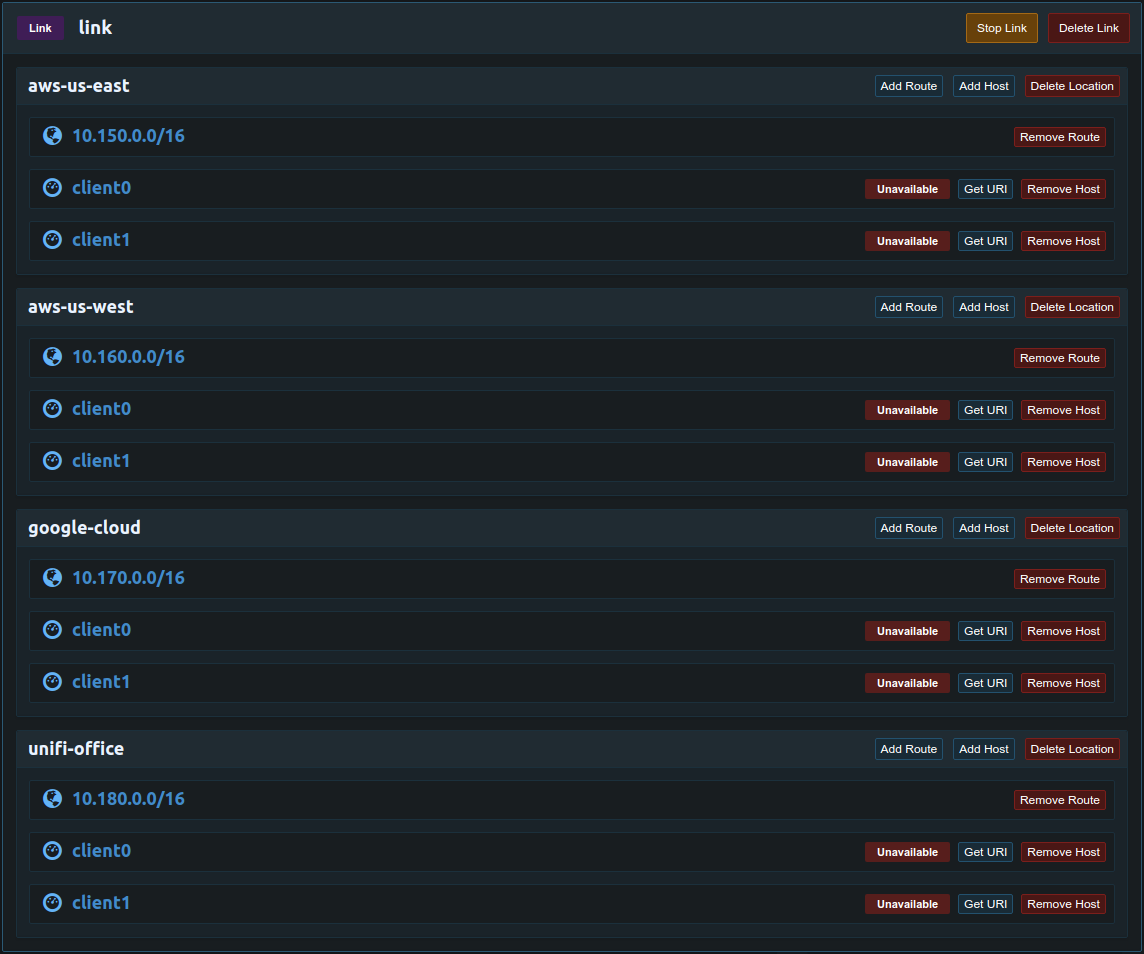

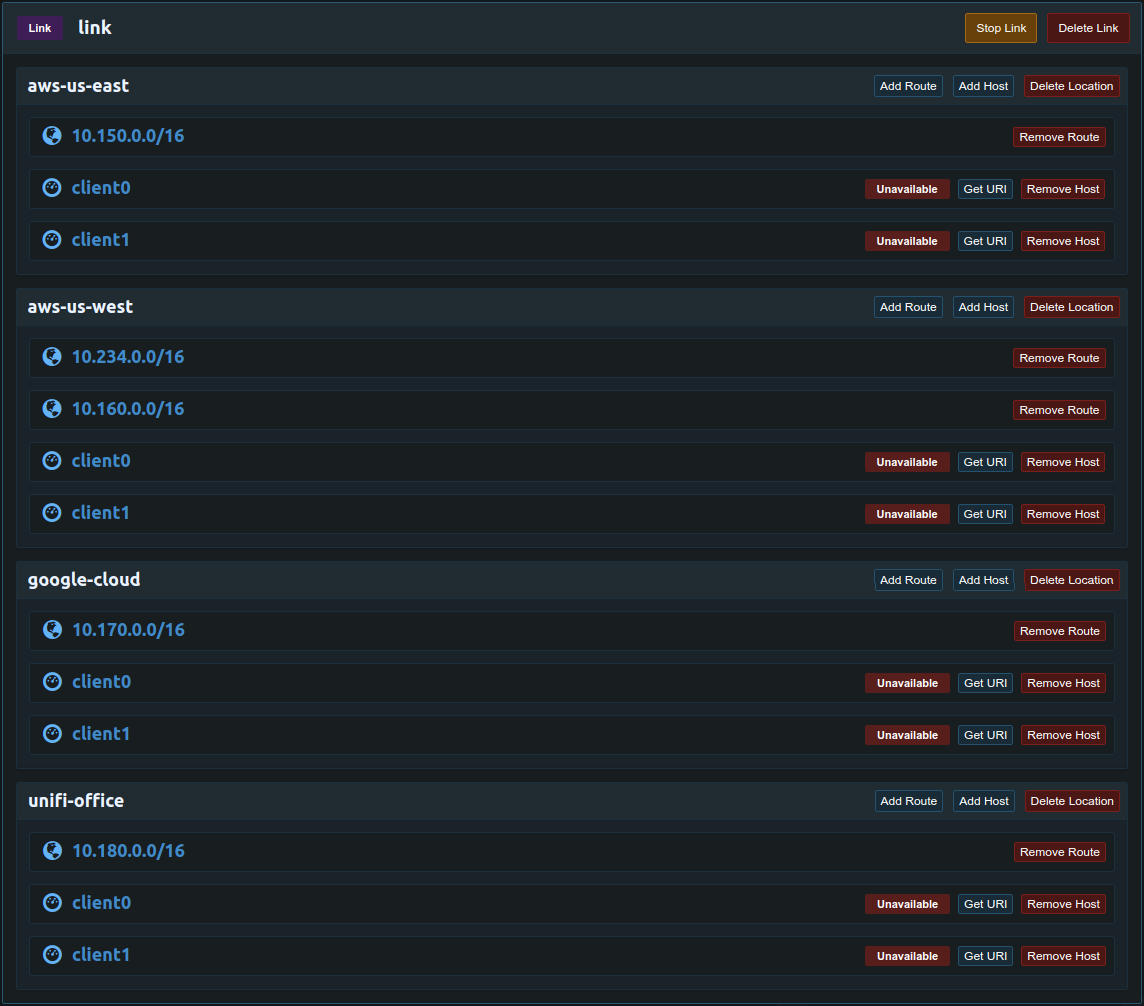

Once the Pritunl servers and MongoDB cluster have been deployed, configure the links. Below is the configuration for the diagram above, with two link clients in each network for failover.

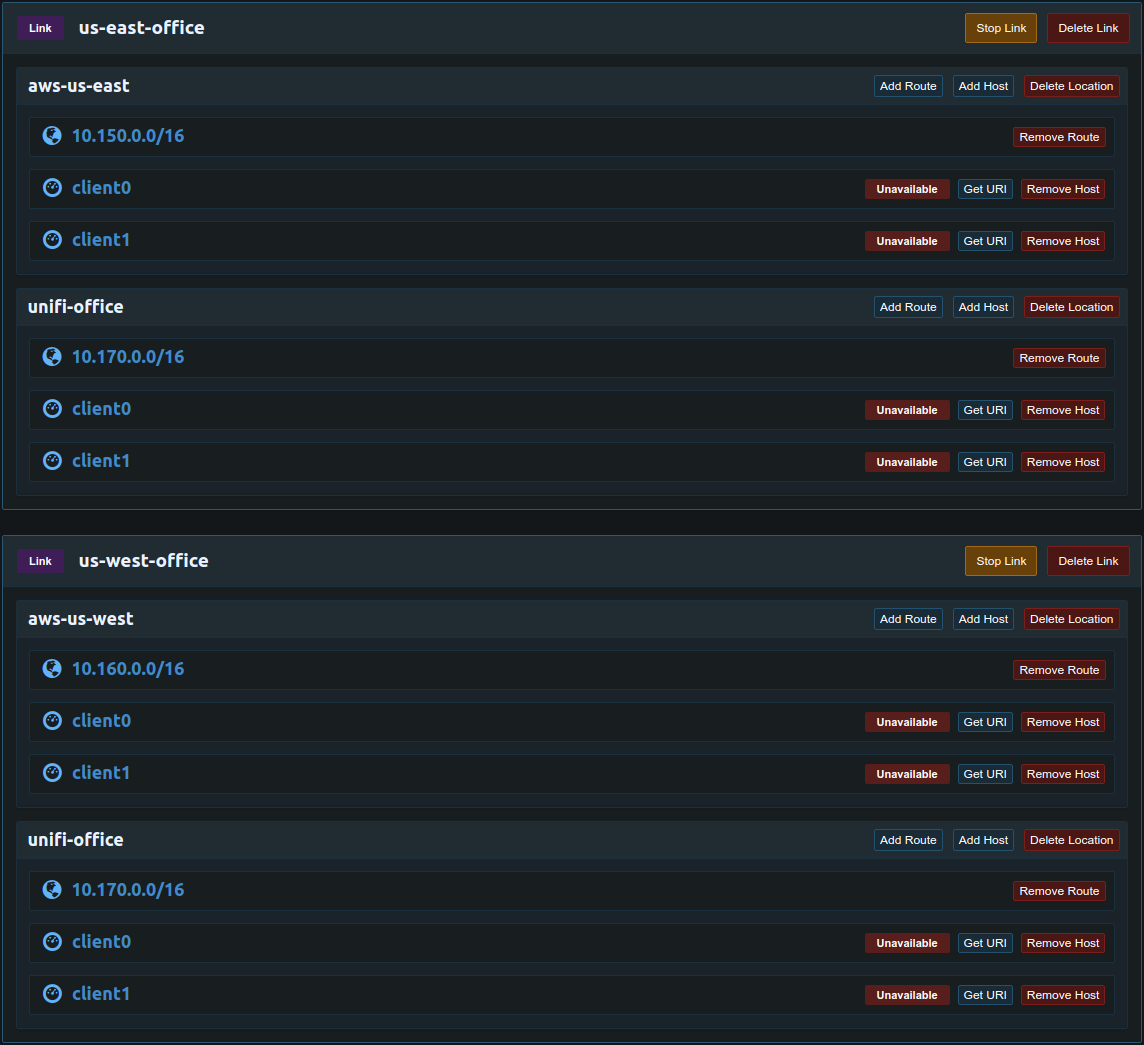

Pritunl Link clients can have multiple URIs added. This is useful for complex link configurations or for setups with multiple Pritunl clusters. The example below allows the office to communicate with both VPCs but does not allow traffic between the VPCs. Read the section on host priority before attempting these configurations.

Deploy Link Clients

Next, deploy the link clients. For the configuration above, two clients will be deployed in each network. The instructions for each platform are listed below.

AWS

Google Cloud

Ubiquiti Unifi

Server Configuration

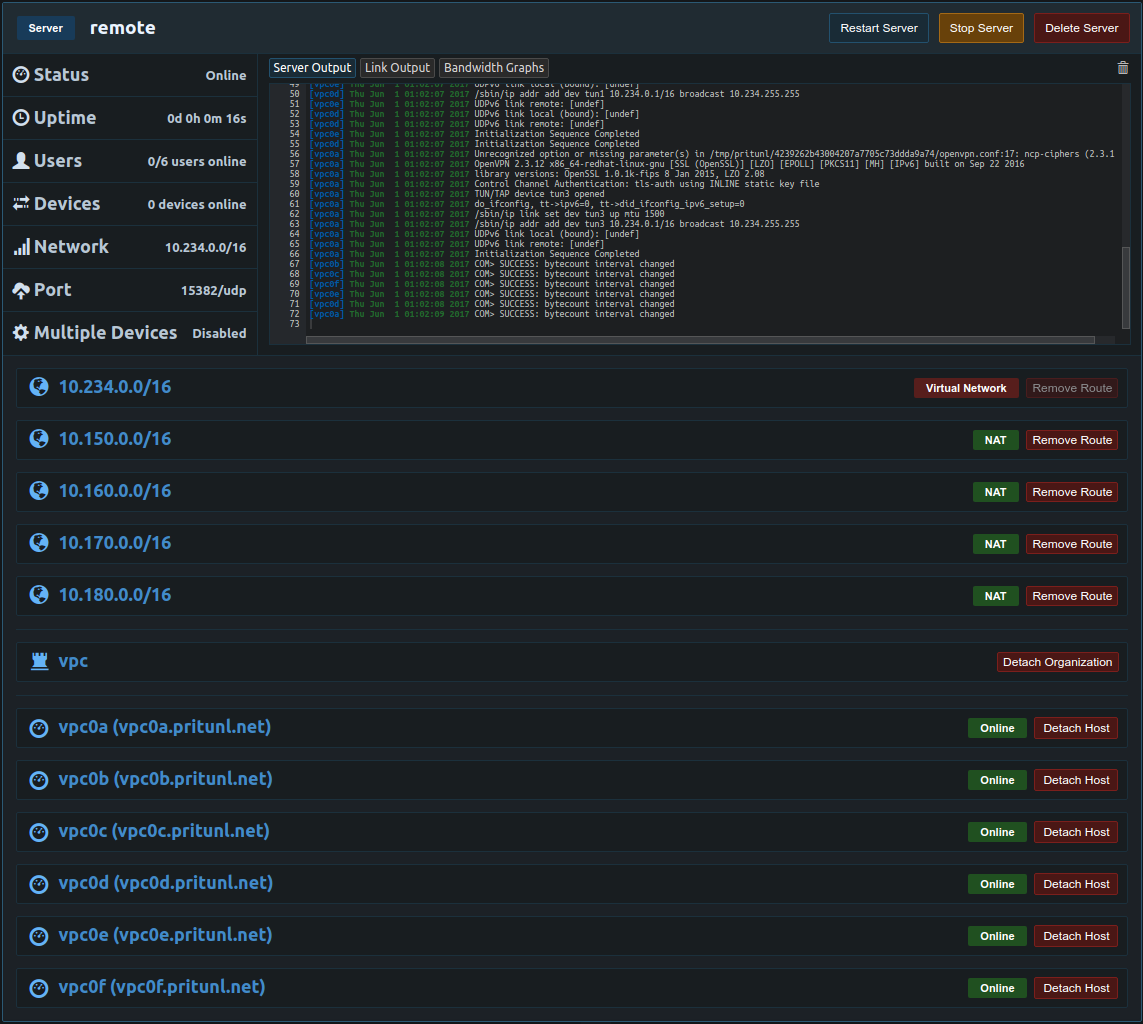

Once the links are deployed, the VPCs and office network will be able to communicate. An OpenVPN server will be deployed to allow remote users to access all the networks. Below is the configuration for the server. In this example, a replicated set of six Pritunl hosts will be used. When using replication, the replication count should equal the number of hosts attached to the server. All the networks added in the links above should be added to the server.

Once done, the Pritunl cluster is ready to use. Refer to the single sign-on tutorials for delegating user access.

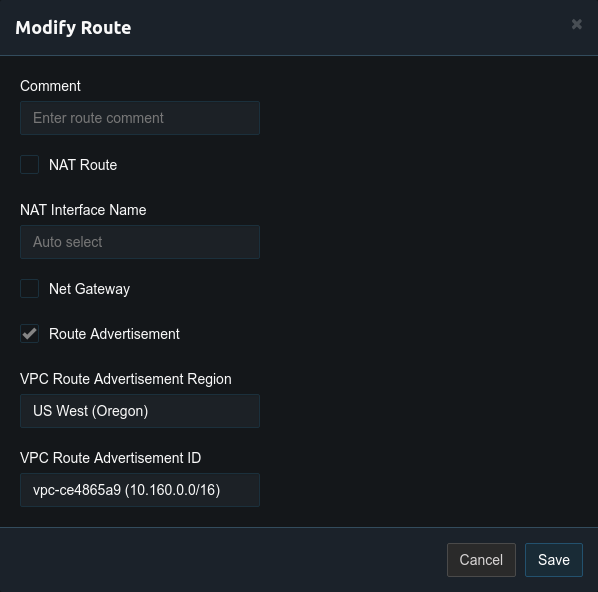

Non-NAT Routing

In the server configuration above, NAT is used for the networks. This is easy to configure, but when remote VPN users access resources on the networks their traffic will appear to come from the local IP address of the Pritunl server. It is often preferable to have VPN users access network resources from their VPN address, which allows VPN user access to be controlled using security groups or firewall rules. To do this, route advertisement must be enabled on the Pritunl server.

First, if the Pritunl servers have EC2 roles configured with VPC access, open the settings and set both the access key and secret key to role for the region that the Pritunl servers are in. If EC2 roles are not configured, enter a secret key and access key from IAM. Once done, open the settings for the route that is labeled Virtual Network and select Route Advertisement. Then select the region and VPC that the Pritunl servers are in. In this example the six Pritunl servers are in the 10.160.0.0/16 VPC in US West (Oregon).

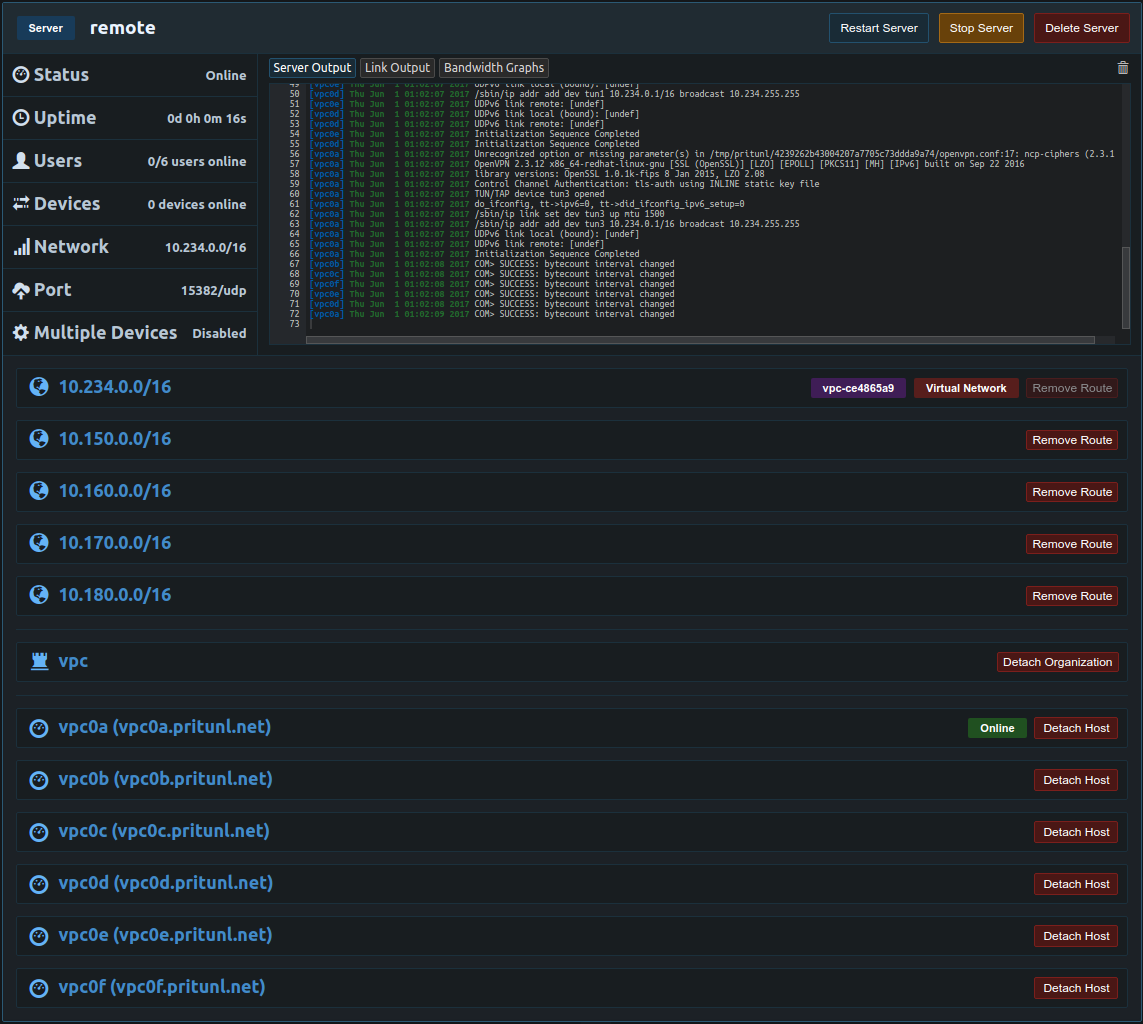

Next, open the settings for the other routes and uncheck NAT. Once done, the server configuration should look similar to the example below. Only the virtual network should have route advertisement enabled. This will automatically add the virtual network 10.234.0.0/16 to the VPC routing table. Replication can't be used with non-NAT routes in this configuration. Instructions for replication with non-NAT routes are in the section below.

Next add the virtual network from above to the link location in the same VPC. In this example the Pritunl server is in the aws-us-west location.
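Because the virtual network is advertised into the VPC routing table, it is worth confirming that its range does not overlap the VPC range before enabling route advertisement. The following is a small sanity-check sketch using Python's standard ipaddress module. The two CIDR ranges are the ones from this example, and the function name find_overlaps and the dictionary labels are hypothetical.

```python
import ipaddress


def find_overlaps(networks):
    """Return a list of (name_a, name_b) pairs whose CIDR ranges overlap."""
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in networks.items()}
    names = sorted(parsed)
    overlaps = []
    for i, name_a in enumerate(names):
        for name_b in names[i + 1:]:
            if parsed[name_a].overlaps(parsed[name_b]):
                overlaps.append((name_a, name_b))
    return overlaps


# Ranges taken from this tutorial's example.
networks = {
    "vpc": "10.160.0.0/16",
    "virtual": "10.234.0.0/16",
}

print(find_overlaps(networks))  # an empty list means no ranges overlap
```

Any other networks attached to the links can be added to the dictionary to check them in the same pass.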

Replication with Non-NAT Routing

For replication to work with non-NAT routing, layer 2 network access is required. This is only available on AWS between instances that are in the same region and availability zone. It is not possible on Google Cloud. Before starting, VXLan Routing must be disabled in the advanced server settings.

Running all the instances in one availability zone could cause downtime if the zone went offline. Host availability groups are used to avoid this. When Pritunl runs replicated servers it will only run replicas within the same availability group. By default, hosts use the default group, so all hosts will be used. The example below has two hosts in each availability zone, and the host availability group is set to the name of the availability zone. The replica count should be set to the maximum number of hosts in a zone. In this example the replication count is two. Once the Pritunl server starts, only one pair of servers in the same availability zone will start. If one host were to go down, replication will switch to a zone that yields the largest number of replicas online. The Replication Group is set in the advanced host settings.

vpc0a - Replication Group: us-west-2a

vpc0b - Replication Group: us-west-2a

vpc0c - Replication Group: us-west-2b

vpc0d - Replication Group: us-west-2b

vpc0e - Replication Group: us-west-2c

vpc0f - Replication Group: us-west-2c
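The failover behavior described above can be illustrated with a short model. This is only a sketch of the rule "use the group with the most replicas online", not Pritunl's actual implementation. The function name pick_replication_group and the alphabetical tie-breaking between equally sized groups are assumptions made for the example.

```python
from collections import defaultdict

# Host-to-group assignment from the list above.
HOST_GROUPS = {
    "vpc0a": "us-west-2a",
    "vpc0b": "us-west-2a",
    "vpc0c": "us-west-2b",
    "vpc0d": "us-west-2b",
    "vpc0e": "us-west-2c",
    "vpc0f": "us-west-2c",
}


def pick_replication_group(host_groups, online_hosts, replica_count):
    """Return (group, hosts) for the group with the most online hosts.

    Ties are broken alphabetically by group name (an assumption of this
    sketch). Returns (None, []) when no host is online.
    """
    online_by_group = defaultdict(list)
    for host, group in host_groups.items():
        if host in online_hosts:
            online_by_group[group].append(host)
    if not online_by_group:
        return None, []
    # max() keeps the first maximum it sees, so sorting the group names
    # first makes the tie-break deterministic.
    best = max(sorted(online_by_group), key=lambda g: len(online_by_group[g]))
    return best, sorted(online_by_group[best])[:replica_count]


# All hosts online: one pair in a single zone runs the server.
print(pick_replication_group(HOST_GROUPS, set(HOST_GROUPS), 2))

# vpc0a goes down: a zone that still has two hosts online is chosen.
print(pick_replication_group(HOST_GROUPS, set(HOST_GROUPS) - {"vpc0a"}, 2))
```

With a replication count of two, losing one host in the active zone moves both replicas to another zone rather than leaving a single replica running.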
