
Configure multi-region Pritunl Cloud on phoenixNAP

This tutorial will create a single-host Pritunl Cloud server on a phoenixNAP Bare Metal Cloud (BMC) server. phoenixNAP provides bare metal servers in Phoenix, Ashburn, Chicago, and several other locations.

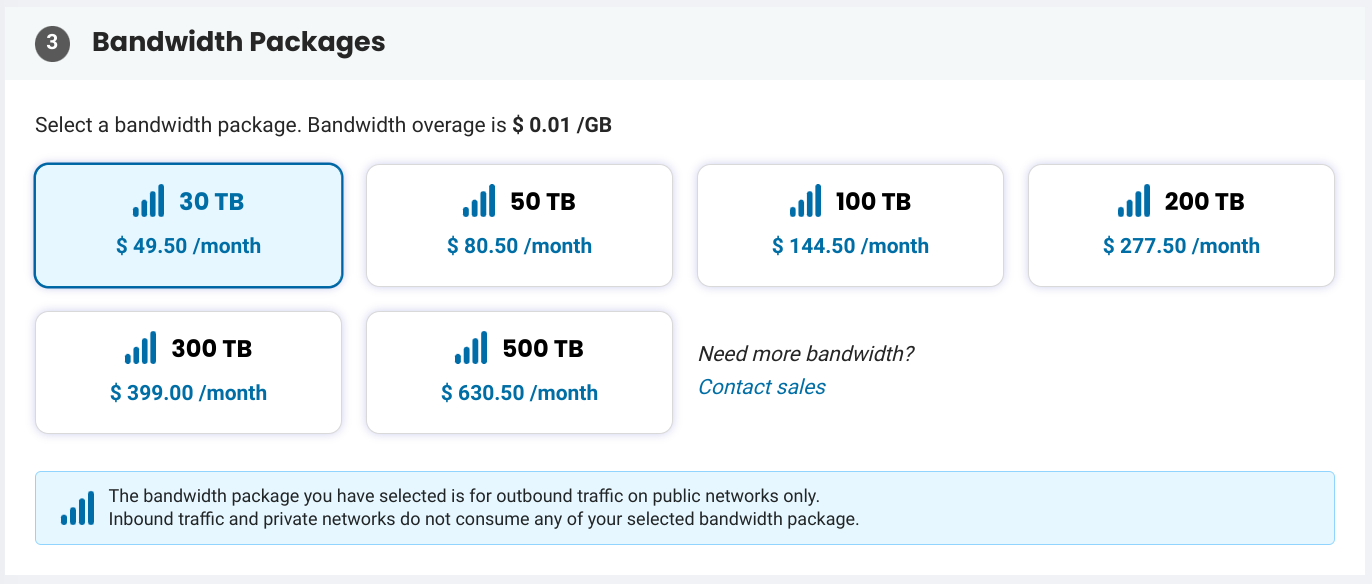

phoenixNAP Bandwidth

Servers have 10 Gbps uplinks with 15 TB of bandwidth included. Additional bandwidth can be purchased in blocks.

Bandwidth testing between Vultr New Jersey and phoenixNAP Ashburn measured 9.3 Gbps with a 7.6 ms ping.

Testing between Oracle Cloud Ashburn and phoenixNAP Ashburn measured 9.4 Gbps with a 0.5 ms ping.

```
# Vultr New Jersey - phoenixNAP Ashburn
iperf3 -t 10 -P 10
[SUM] 0.00-10.00 sec 10.9 GBytes 9.38 Gbits/sec 11 sender
[SUM] 0.00-10.01 sec 10.9 GBytes 9.36 Gbits/sec receiver

ping
icmp_seq=1 ttl=52 time=7.67 ms

# Oracle Cloud Ashburn - phoenixNAP Ashburn
iperf3 -t 10 -P 10
[SUM] 0.00-10.00 sec 11.0 GBytes 9.43 Gbits/sec 1840 sender
[SUM] 0.00-10.00 sec 11.0 GBytes 9.41 Gbits/sec receiver

ping
icmp_seq=1 ttl=59 time=0.525 ms
```

MongoDB Atlas

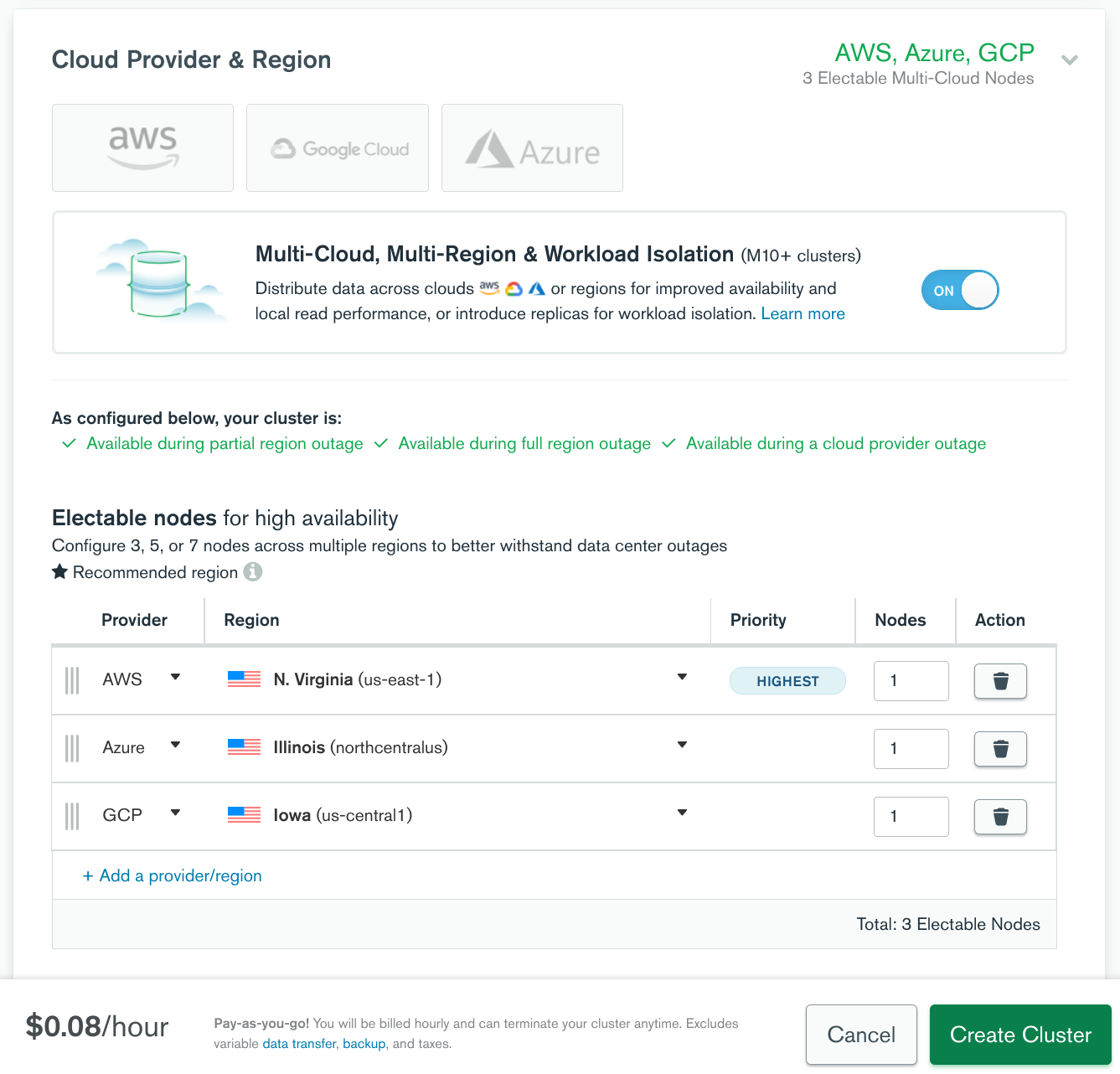

This tutorial will run the MongoDB server on the Pritunl Cloud bare metal server. This works for single-host configurations, but multi-host Pritunl Cloud clusters should use a MongoDB replica set. MongoDB Atlas can provision multi-cloud and multi-region clusters that remain available even during a full region or full cloud provider outage.

Below is an example MongoDB Atlas configuration that will provision 3 replicas across 3 different cloud providers in 3 different states. To use MongoDB Atlas, enter no when the Pritunl Cloud builder prompts to install MongoDB, then paste the MongoDB URI from Atlas when the builder prompts for it.

Additionally, Pritunl Cloud instances will continue to function if the entire MongoDB database becomes unavailable. The management web console will stop working and instance configuration changes cannot be made, but the currently active instances will keep running.

This is also true of the Pritunl Cloud process. Pritunl Cloud uses a state-based rather than event-based design, which allows the pritunl-cloud process to be stopped at any time without affecting the running instances. When the process starts again, it parses the full system state, compares it to the state in the database, and applies any changes made while the process was not running. This also allows Pritunl Cloud to be updated with zero downtime.
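To make the state-based design more concrete, the sketch below shows the general reconcile pattern: compare the desired state with the observed state and act only on the differences. It is a hypothetical illustration, not Pritunl Cloud's implementation; the `reconcile` function and its inputs (newline-separated instance names) are assumptions made for the example.

```shell
#!/bin/sh
# Hypothetical illustration of state-based reconciliation (not Pritunl Cloud code).
# reconcile DESIRED RUNNING
#   DESIRED: newline-separated names the database says should be running
#   RUNNING: newline-separated names actually running on the host
# Prints "start NAME" for each missing instance and "stop NAME" for each extra one.
reconcile() {
  DESIRED="$1" RUNNING="$2" awk 'BEGIN {
    n = split(ENVIRON["DESIRED"], d, "\n")
    for (i = 1; i <= n; i++) if (d[i] != "") want[d[i]] = 1
    n = split(ENVIRON["RUNNING"], r, "\n")
    for (i = 1; i <= n; i++) if (r[i] != "") have[r[i]] = 1
    # Desired but not running: start it
    for (k in want) if (!(k in have)) print "start " k
    # Running but no longer desired: stop it
    for (k in have) if (!(k in want)) print "stop " k
  }' | LC_ALL=C sort
}
```

Because each run starts from the full desired and observed state rather than a stream of events, changes made while the process was stopped are simply picked up on the next run.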

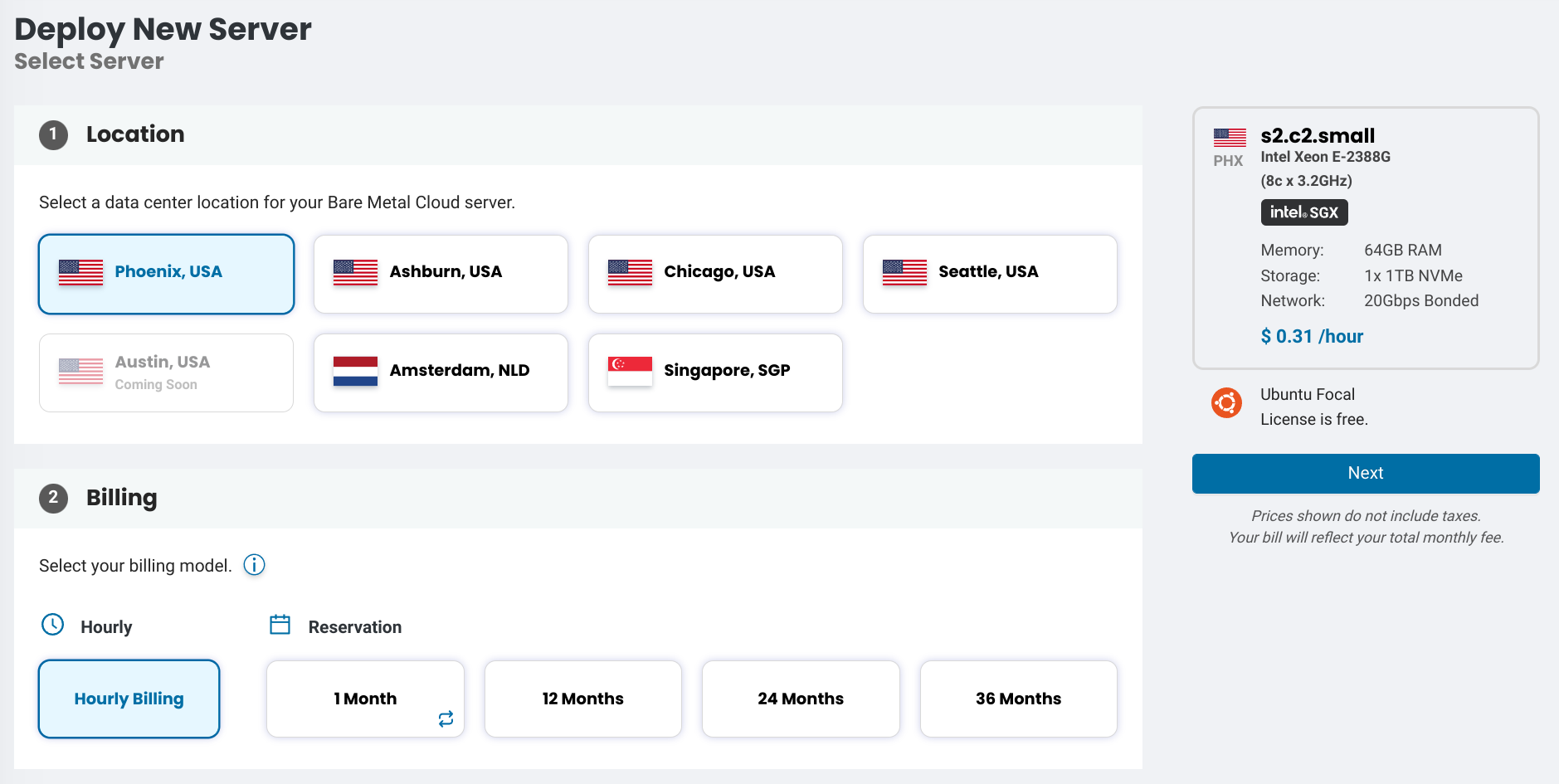

Create phoenixNAP Bare Metal Server

Log in to the phoenixNAP BMC web console and click Deploy New Server. Select a location and Hourly Billing. With hourly billing the server is only billed while it is active, so it can be removed after a few hours of testing and no additional charges will occur.

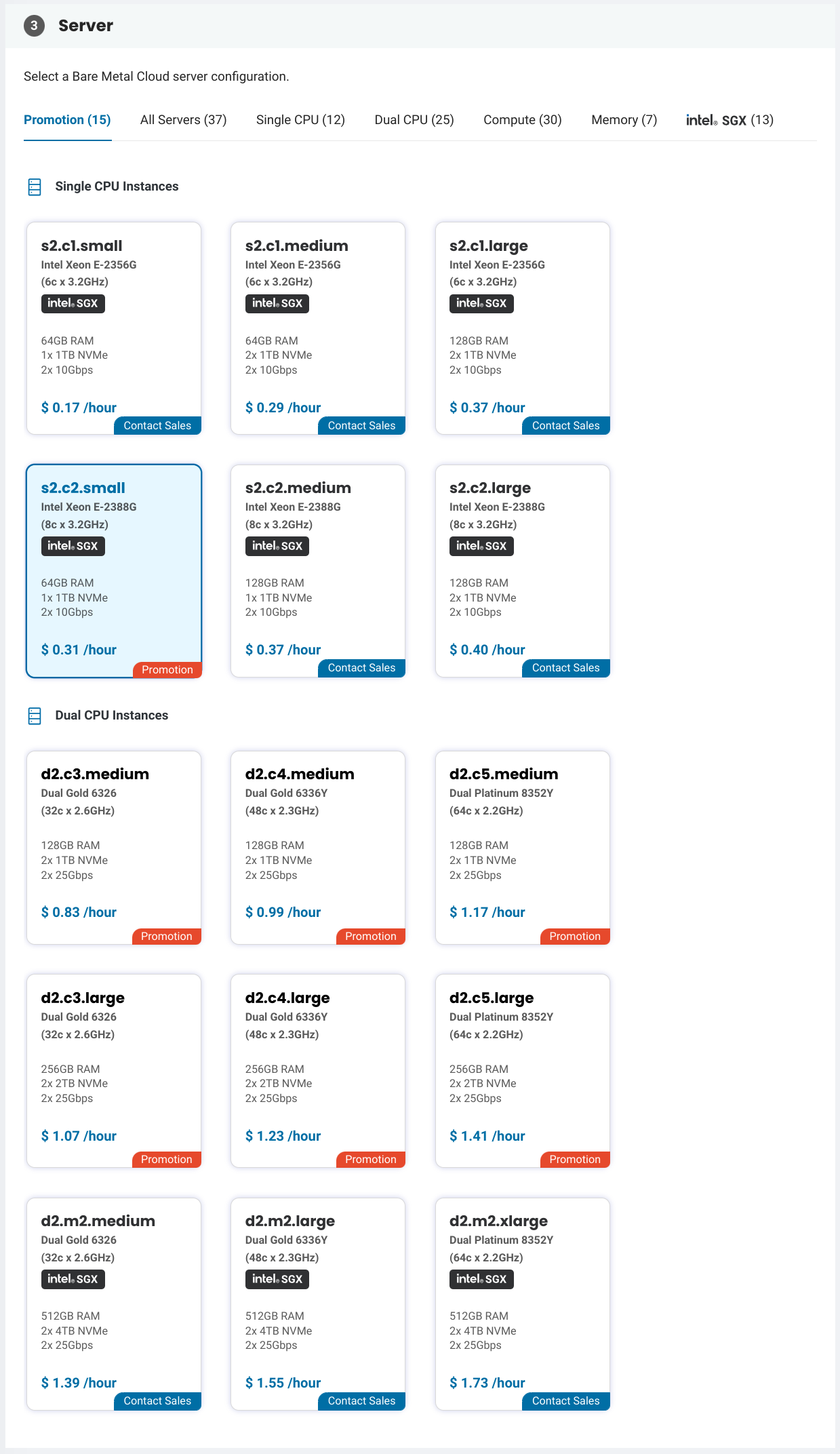

Select the instance size for the server. The phoenixNAP BMC allows quickly launching predefined server configurations with hourly billing. For a production configuration, it is recommended to use phoenixNAP Dedicated Servers, which allow ordering servers with custom hardware configurations. A custom ISO can also be provisioned on the server to install the operating system with more advanced configurations such as software RAID and full disk encryption.

Install Pritunl Cloud

Connect to the server and run the commands below to update the server and download the Pritunl Cloud Builder. Check the repository for newer versions of the builder; when using a newer release, update both the download URL and the SHA-256 checksum. After running the builder, use the default yes option for all prompts.

```shell
sudo apt update
sudo apt -y upgrade
wget https://github.com/pritunl/pritunl-cloud-builder/releases/download/1.0.2653.32/pritunl-builder
echo "b1b925adbdb50661f1a8ac8941b17ec629fee752d7fe73c65ce5581a0651e5f1  pritunl-builder" | sha256sum -c -
chmod +x pritunl-builder
sudo ./pritunl-builder
```

Run the command sudo pritunl-cloud default-password to get the default login password. Then open a web browser to the server's IP address and log in.

```shell
sudo pritunl-cloud default-password
```

Edit the /etc/netplan/50-cloud-init.yaml file on the server and find the section below with the network IP address configuration.

```shell
sudo nano /etc/netplan/50-cloud-init.yaml
```

```yaml
vlans:
    bond0.10:
        addresses:
        - 10.0.0.11/24
        id: 10
        link: bond0
        mtu: 9000
    bond0.11:
        addresses:
        - 123.123.123.82/29
        - 123.123.123.83/29
        - 123.123.123.84/29
        - 123.123.123.85/29
        - 123.123.123.86/29
        gateway4: 123.123.123.81
```

These additional IP addresses will need to be removed from the host network interface and added to Pritunl Cloud for use with virtual instances. All but the first IP address in the list will be removed. The first IP address must remain configured and will be used for the host.

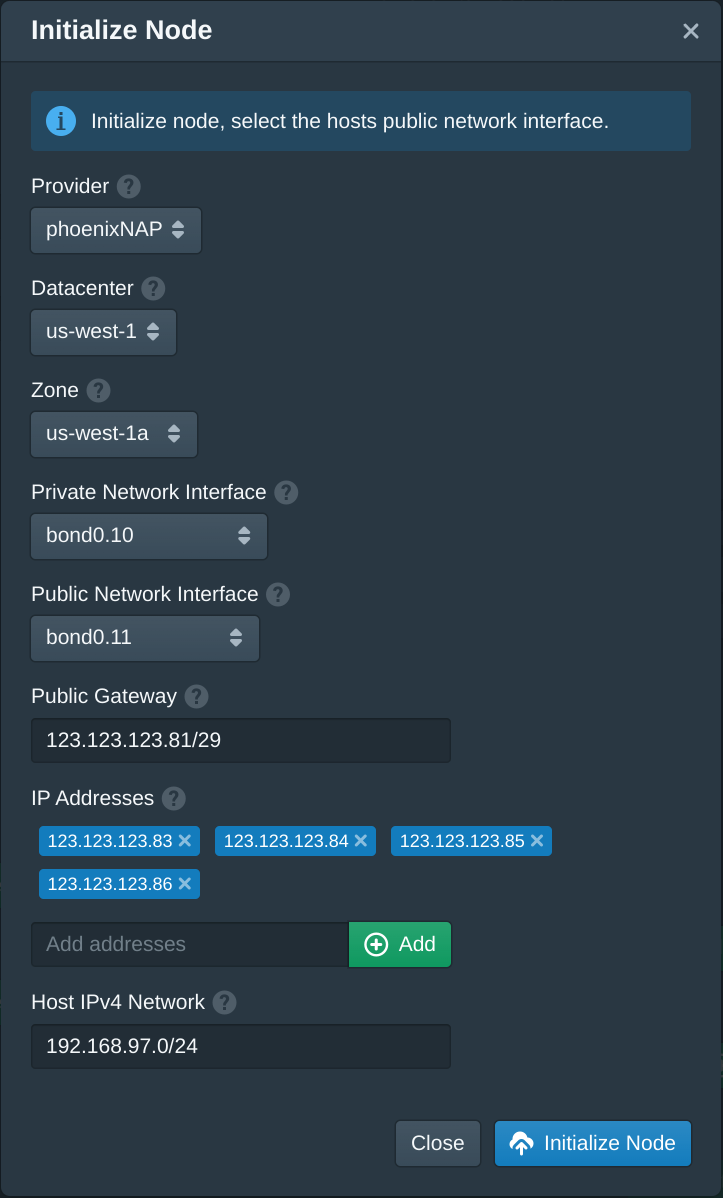

Open the Pritunl Cloud web console and click Initialize Node in the Nodes tab. Then set the Provider to phoenixNAP. Set the Datacenter and Zone to the first options available; these can be renamed later. The configuration file above has two network interfaces, in this example bond0.10 and bond0.11. Set the Private Network Interface to the first interface name (bond0.10) and the Public Network Interface to the second interface name (bond0.11).

The IP addresses will have a CIDR prefix length; in this example it is /29. Copy the gateway4 address to the Public Gateway field and append the prefix length to it. For this example this will be 123.123.123.81/29.

Next copy all but the first IP address to the IP Addresses option. The /29 prefix length must be excluded when copying these IP addresses. For this example the IP addresses are 123.123.123.83, 123.123.123.84, 123.123.123.85, 123.123.123.86.
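The Public Gateway and IP Addresses values can be derived mechanically from the netplan addresses. A minimal sketch with two hypothetical helper functions, assuming the addresses are passed in the same order as in 50-cloud-init.yaml (host address first):

```shell
#!/bin/sh
# public_gateway GATEWAY HOST_ADDRESS_WITH_PREFIX  (hypothetical helper)
# Appends the prefix length of the host address (e.g. /29) to the gateway4 address.
public_gateway() {
  printf '%s/%s\n' "$1" "${2#*/}"
}

# instance_ips ADDRESS_WITH_PREFIX...  (hypothetical helper)
# Skips the first address, which stays on the host, and prints the remaining
# addresses one per line with the /prefix removed.
instance_ips() {
  [ "$#" -gt 0 ] || return 1
  shift
  for addr in "$@"; do
    printf '%s\n' "${addr%/*}"
  done
}
```

With the example values, public_gateway 123.123.123.81 123.123.123.82/29 prints 123.123.123.81/29, and instance_ips with the five bond0.11 addresses prints 123.123.123.83 through 123.123.123.86.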

Due to the limited number of public IP addresses, not every Pritunl Cloud instance will have a public IP. These public IP addresses should only be used for instances such as web servers. The other instances will remain NATed behind the Pritunl Cloud host IP address.

To accomplish this, Pritunl Cloud creates an additional host-only network that provides networking between the Pritunl Cloud host and the instances. Pritunl Cloud uses a high-isolation networking design that places each instance in a separate network namespace, and this additional network is required to bridge that isolation. The host network is then NATed, which allows instances without a public IP address to reach the internet through it. Instances with public IP addresses will route internet traffic using the instance public IP. The Host IPv4 Network option specifies the subnet for this network. It can be any network, and the same host network can exist on multiple Pritunl Cloud hosts in a cluster. The network only needs to be large enough for the maximum number of instances the host is able to run. In this example the network 192.168.97.0/24 will be used.
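Sizing the Host IPv4 Network is simple arithmetic: an IPv4 prefix of length p contains 2^(32 - p) addresses, two of which (network and broadcast) are not usable by hosts. The sketch below also assumes one usable address is taken by the host side of the host-only network; that reservation is an assumption, so leave some headroom. Both function names are hypothetical.

```shell
#!/bin/sh
# usable_hosts PREFIX: number of usable IPv4 host addresses in a /PREFIX network.
# Valid for prefixes 1-30 (network and broadcast addresses excluded).
usable_hosts() {
  echo $(( (1 << (32 - $1)) - 2 ))
}

# fits_instances PREFIX MAX_INSTANCES  (hypothetical helper)
# Succeeds if the network can address MAX_INSTANCES instances, assuming one
# usable address is reserved for the host itself.
fits_instances() {
  [ $(( $(usable_hosts "$1") - 1 )) -ge "$2" ]
}
```

For the 192.168.97.0/24 example, usable_hosts 24 gives 254 addresses. The same arithmetic explains the public block above: a /29 has 6 usable addresses, 123.123.123.81 through 123.123.123.86, of which one is the gateway, one stays on the host, and four are left for instances.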

Once done click Initialize Node.

Once the node is configured the IP addresses need to be removed from the network configuration to allow Pritunl Cloud to use the addresses. Edit the /etc/netplan/50-cloud-init.yaml file and delete the lines containing the IP addresses that were added above. Only one IP address should remain as shown in the example below. Save and close this file.

```shell
sudo nano /etc/netplan/50-cloud-init.yaml
```

```yaml
vlans:
    bond0.10:
        addresses:
        - 10.0.0.11/24
        id: 10
        link: bond0
        mtu: 9000
    bond0.11:
        addresses:
        - 123.123.123.82/29
        gateway4: 123.123.123.81
```

Run the commands below to apply this configuration update, which will remove the addresses from the interface. Then create the 99-disable-network-config.cfg file; this will prevent cloud-init from overwriting the network configuration on reboot. Finally, run ip address to verify the network interface no longer has the additional IP addresses.

sudo netplan apply

sudo tee /etc/cloud/cloud.cfg.d/99-disable-network-config.cfg << EOF

network: {config: disabled}

EOF

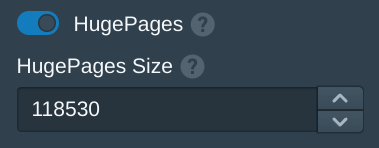

ip addressStatic hugepages provide a sector of the system memory to be dedicated for hugepages. This memory will be used for instances allowing higher memory performance and preventing the host system from disturbing memory dedicated for virtual instances.

To configure hugepages, run the command below to get the memory size in megabytes. This example has 128 GB of memory. Take the total from the output and subtract 10000 to leave roughly 10 GB of memory for the host. The host should be left with at least 4 GB of memory; the rest of the memory will be allocated to hugepages, which will be used for Pritunl Cloud instances.

```
free -m
               total        used        free      shared  buff/cache   available
Mem:          128530        1433      125442           2        1654      126182
Swap:           8191           0        8191
```

In the Pritunl Cloud web console, open the Hosts tab, enable HugePages, and enter the memory size with 10000 subtracted. In this example 118530 will be used, allocating approximately 118 GB for instance memory. Once done, click Save. The system hugepages will not update until an instance is started. If the host becomes unstable, reduce this value to leave more memory for the host system.
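The HugePages value can be computed directly from the free -m output. A minimal sketch, assuming the 10000 MB host reservation used in this example and the 4 GB minimum mentioned above; hugepages_mb and hugepages_count are hypothetical helpers, and the page count assumes the common 2 MB default hugepage size (check Hugepagesize in /proc/meminfo before relying on it).

```shell
#!/bin/sh
# hugepages_mb FREE_M_OUTPUT [RESERVE_MB]  (hypothetical helper)
# Prints the "Mem:" total from `free -m` minus the memory reserved for the host.
# Fails if the host reservation is below 4096 MB.
hugepages_mb() {
  reserve=${2:-10000}
  [ "$reserve" -ge 4096 ] || return 1
  printf '%s\n' "$1" | awk -v reserve="$reserve" '$1 == "Mem:" { print $2 - reserve; exit }'
}

# hugepages_count MB  (hypothetical helper)
# Number of hugepages for MB megabytes, assuming a 2048 kB Hugepagesize.
hugepages_count() {
  echo $(( $1 / 2 ))
}

# Example on the host: hugepages_mb "$(free -m)"
```

For the output above this gives 118530, or 59265 pages of 2 MB, which can be compared with HugePages_Total in /proc/meminfo once an instance has started.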

Launch Instance

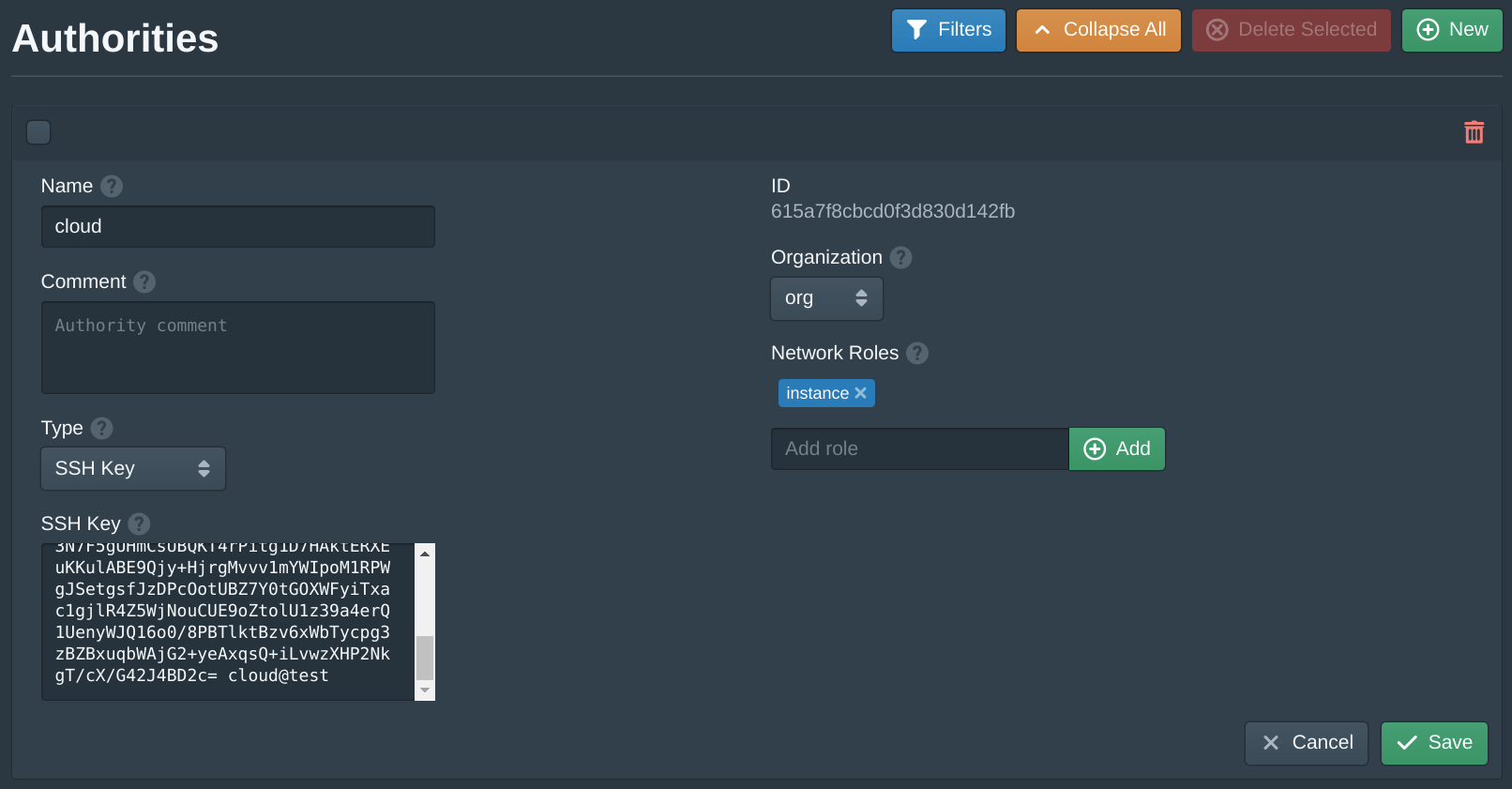

Open the Authorities tab and set the SSH Key field to your public SSH key. Then click Save.

This authority will associate an SSH key with instances that share the same instance role. Pritunl Cloud uses roles to match authorities and firewalls to instances.
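Role matching can be thought of as a set intersection: an authority or firewall applies to an instance when they share at least one role. The sketch below illustrates that idea with a hypothetical roles_match function over space-separated role lists; it is a conceptual model, not Pritunl Cloud's code.

```shell
#!/bin/sh
# roles_match INSTANCE_ROLES RESOURCE_ROLES  (hypothetical helper)
# Both arguments are space-separated role names. Succeeds if any role appears
# in both lists, i.e. the authority or firewall would apply to the instance.
roles_match() {
  for a in $1; do
    for b in $2; do
      [ "$a" = "$b" ] && return 0
    done
  done
  return 1
}
```

With the single instance role used in this tutorial, roles_match instance instance succeeds, which is why adding that role to a new instance picks up both the SSH key authority and the default firewall.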

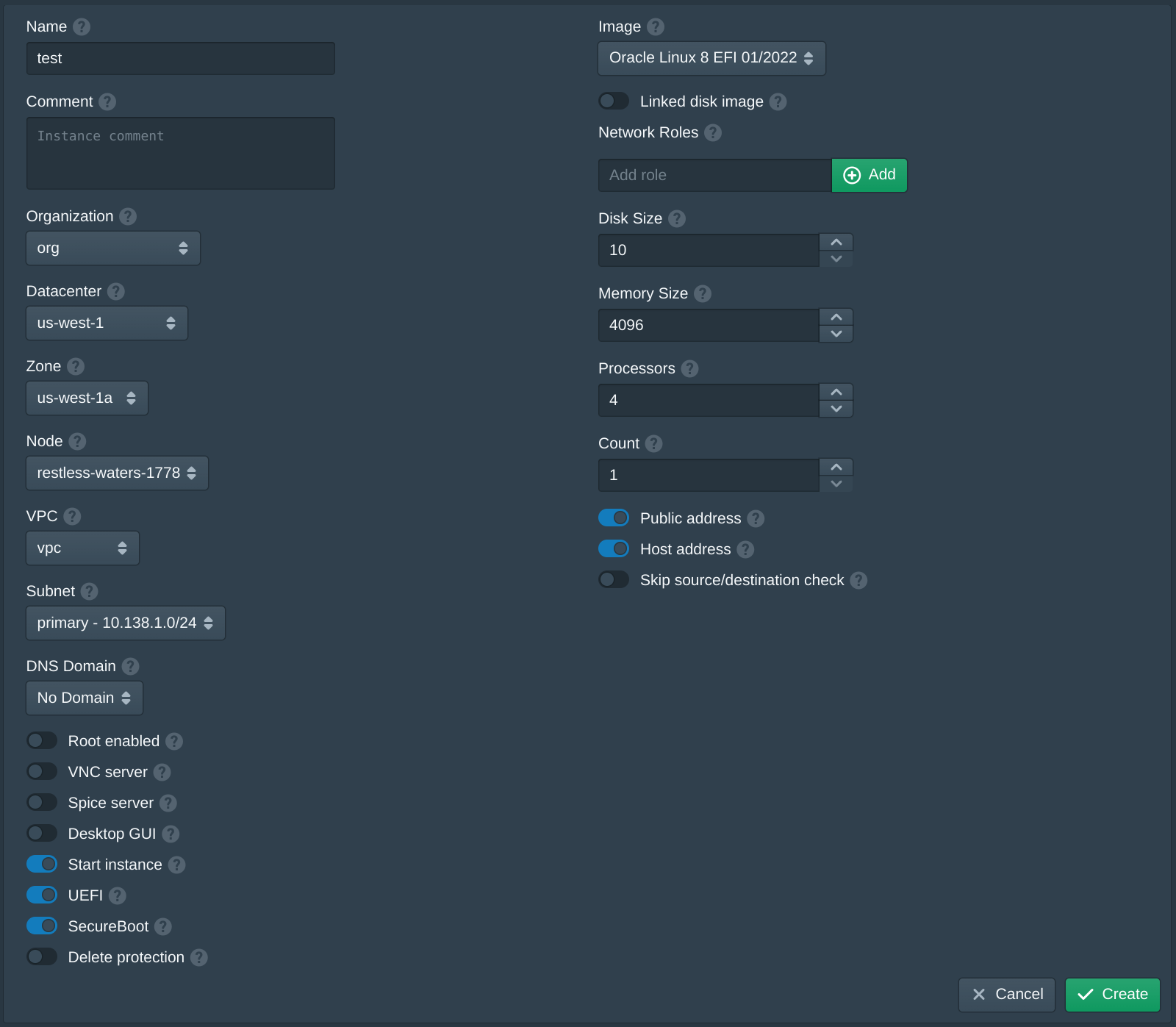

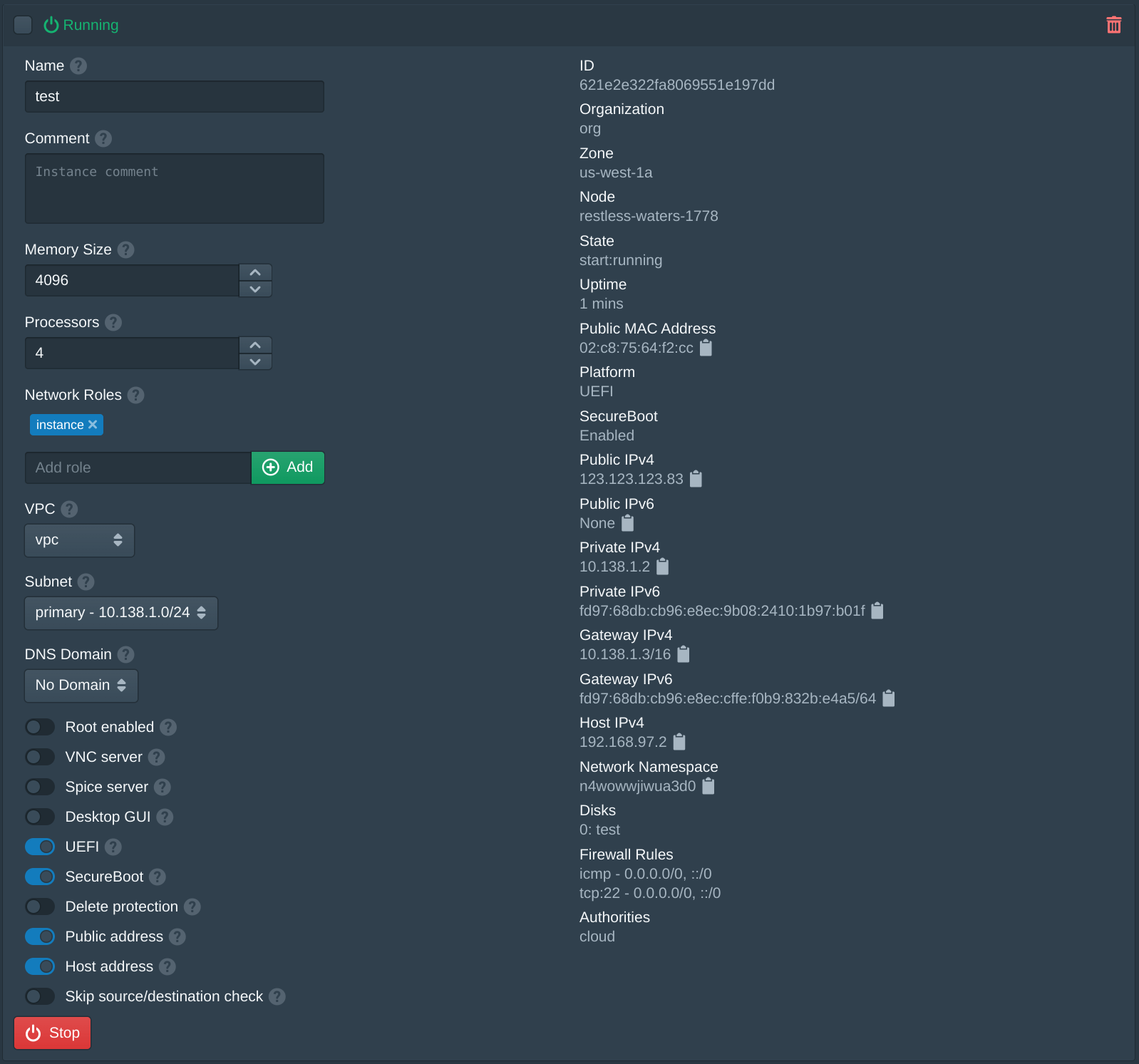

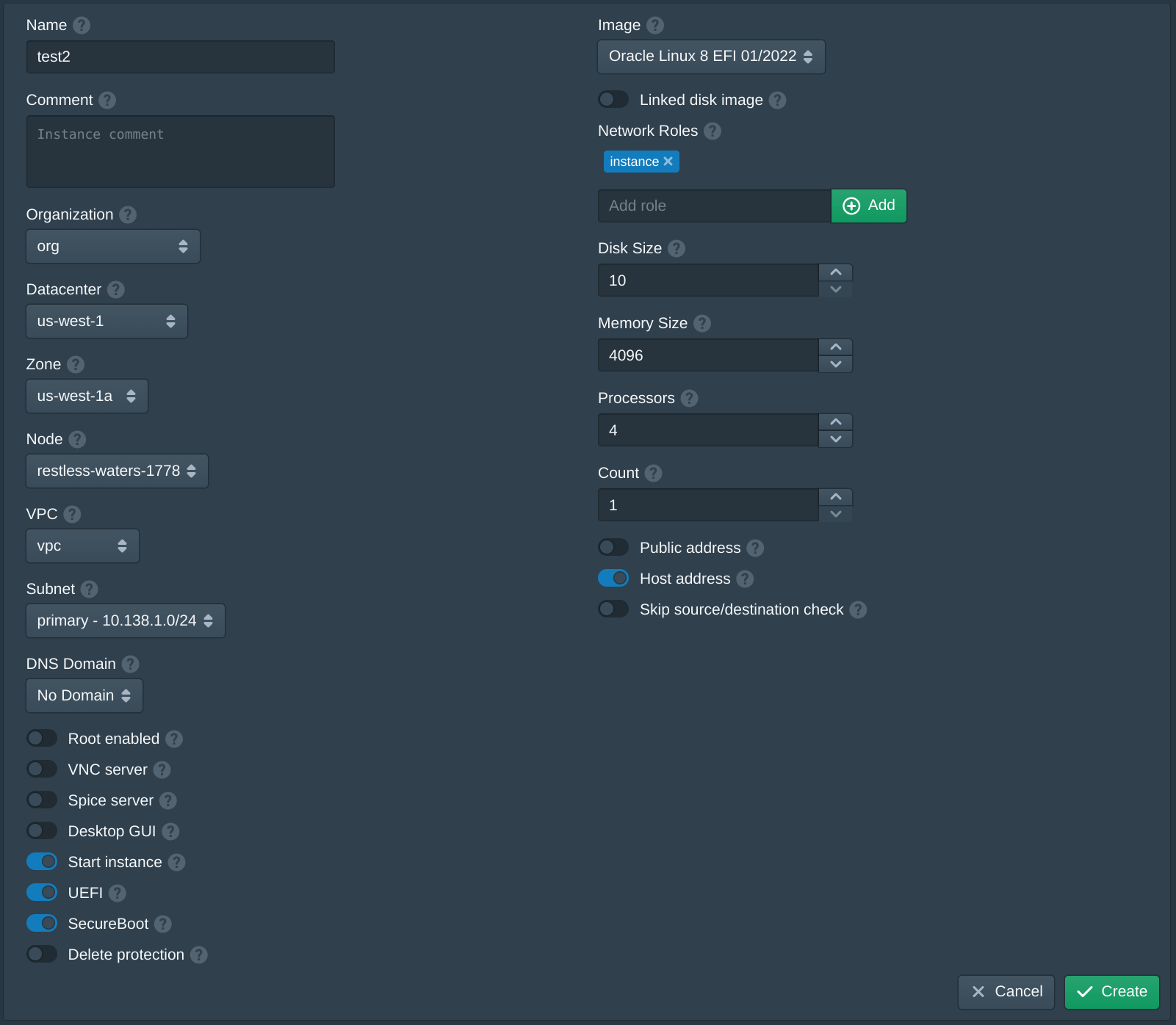

Next open the Instances tab and click New to create a new instance. Set the Name to test, the Organization to org, the Datacenter to us-west-1, the Zone to us-west-1a, the VPC to vpc, the Subnet to primary, and the Node to the first available node. Then set the Image to the latest version of Oracle Linux 8 EFI. Then enter instance in the Network Roles field and click Add; this will associate the default firewall and the authority with the SSH key above. Then click Create. The create instance panel will remain open with the fields filled in to allow quickly creating multiple instances. Once the instances are created, click Cancel to close the dialog.

For this instance the Public address option will be enabled to give the instance a public IP. This option can be disabled either when creating an instance or after the instance has been created to remove its public IP.

If an instance fails to start refer to the Debugging section.

When creating the first instance, the instance Image will be downloaded and its signature will be validated. This may take a few minutes; future instances will use a cached image.

After the instance has been created the server can be accessed using the public IP address shown and the cloud username. For this example this is ssh cloud@123.123.123.83.

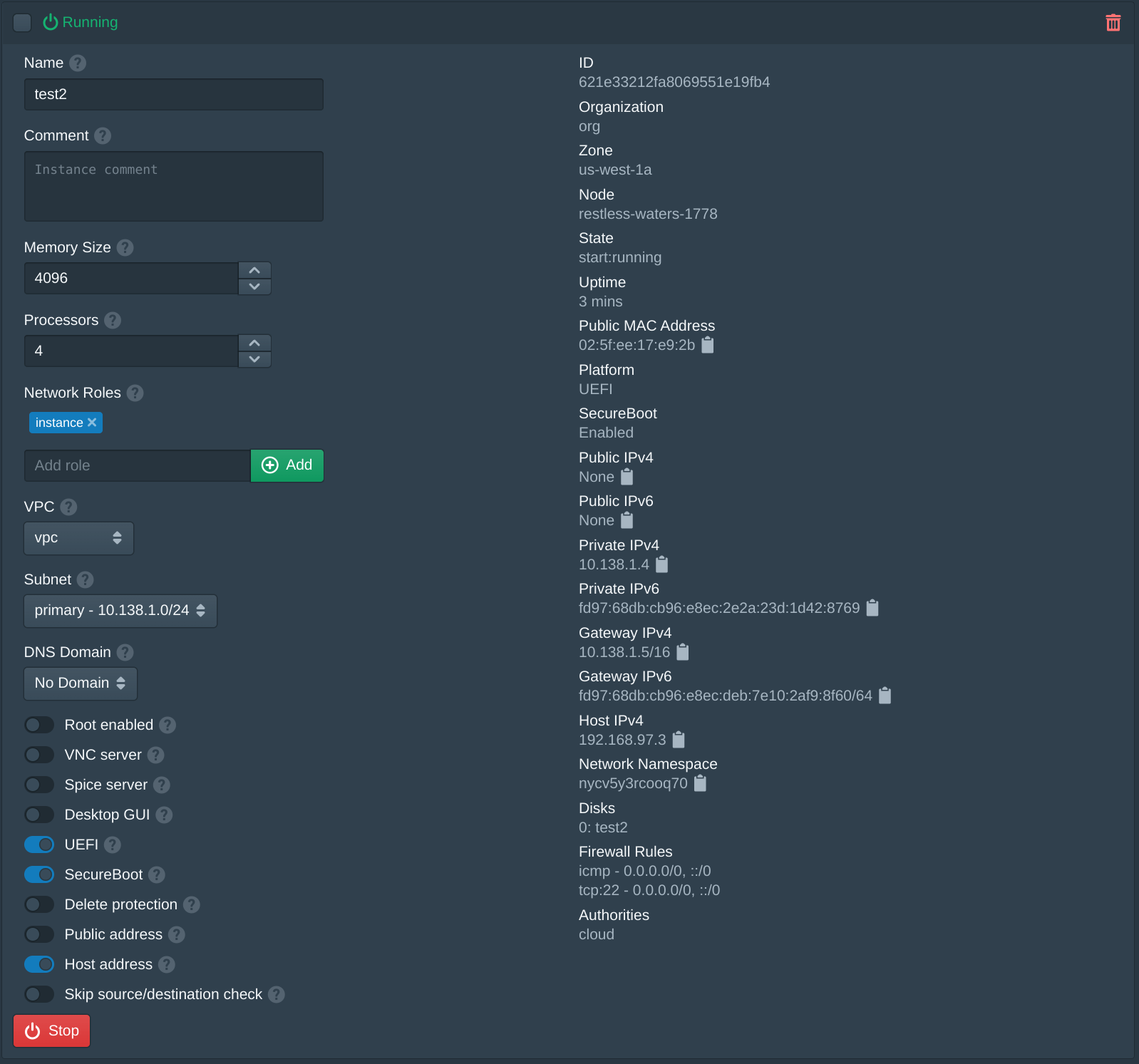

Next create an additional instance using the same options above with Public address disabled.

To access this instance, use the other instance as an SSH jump proxy and connect to this instance's Private IPv4 address. For this example this is ssh -J cloud@123.123.123.83 cloud@10.138.1.4. This will first connect to the instance above and then connect to the internal instance using the VPC IP address. For additional network access, a VPN server can be configured to route the VPC network. By default the firewall rules allow any IP address to access SSH; if the firewall is restricted, it will need to allow SSH access from the private IP address of the jump instance.

Optionally, the public IP address of the Pritunl Cloud host can also be used as a jump server to connect to the Host IPv4 address of the instance. For this example this is ssh -J ubuntu@123.123.123.82 cloud@192.168.97.3.
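To avoid retyping the jump host, the same hops can be stored with the OpenSSH ProxyJump option (OpenSSH 7.3 or later) in ~/.ssh/config. A sketch that prints such an entry; ssh_jump_entry and the test-private alias are hypothetical, and the addresses are the example values from above.

```shell
#!/bin/sh
# ssh_jump_entry ALIAS HOSTNAME USER JUMP  (hypothetical helper)
# Prints an ssh_config Host block that reaches HOSTNAME through the JUMP host.
ssh_jump_entry() {
  printf 'Host %s\n    HostName %s\n    User %s\n    ProxyJump %s\n' "$1" "$2" "$3" "$4"
}

# Example: append an entry for the private instance, then connect with `ssh test-private`.
# ssh_jump_entry test-private 10.138.1.4 cloud cloud@123.123.123.83 >> ~/.ssh/config
```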

Instance Usage

The default firewall will only allow SSH traffic. This can be changed from the Firewalls tab. To provide public access to services running on the instances, either a public IP address or the built-in load balancer functionality can be used.

To use the Pritunl Cloud load balancer, DNS records will need to be configured for the Pritunl Cloud server. When a web request is received by the Pritunl Cloud server, the domain will be used to either route that request to an instance through the load balancer or provide access to the admin or user console. In the Nodes tab, once the Load Balancer option is enabled, fields for Admin Domain and User Domain will be shown. DNS records for both domains must point to the Pritunl Cloud server IP. The admin domain provides access to the admin console, and the user domain provides access to the user web console, which is a more limited version of the admin console intended for non-administrator users to manage instance resources. Non-administrator users are limited to accessing resources only within the organizations they have access to.
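Conceptually, the node dispatches each web request on the requested domain. The sketch below models that decision with a hypothetical route_request function; the example.com domains are placeholders, and sending every other domain to the load balancer is a simplification (in practice only domains configured on a load balancer would be routed to instances).

```shell
#!/bin/sh
# route_request REQUEST_DOMAIN ADMIN_DOMAIN USER_DOMAIN  (hypothetical helper)
# Prints which component would handle a request for REQUEST_DOMAIN.
route_request() {
  case "$1" in
    "$2") echo admin-console ;;
    "$3") echo user-console ;;
    *) echo load-balancer ;;   # simplification: any other domain goes to a load balancer
  esac
}
```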
